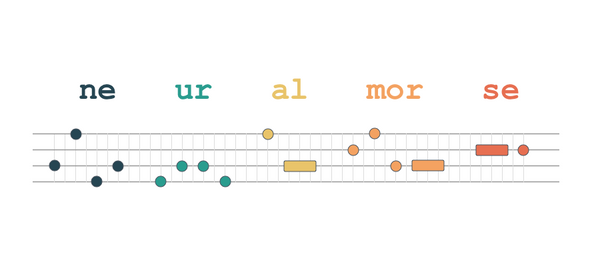

In deep learning for natural language processing (NLP), input text is often broken into "subword" units, pieces that are shorter than words. In this article, we'll review common subword tokenization techniques, including WordPiece, byte-pair encoding (BPE), and SentencePiece,