Pretrained language models (PLMs) such as BERT and RoBERTa are used in more and more natural language processing (NLP) applications in many languages, including Japanese. Companies and research institutions in Japan have published many variants of pretrained BERT models that differ in details such as the type and size of the training data and the tokenization method. A simple Google search turns up several of them. This makes it really difficult for you, as an average practitioner interested in applying BERT to your Japanese NLP task, to choose the right one, especially if you don't read Japanese.

In this post, I'm going to compare various Japanese pretrained BERT models and their task performance and make a specific recommendation as of this writing (January 2021).

Compared models

Here's the list of all the pretrained BERT/DistilBERT models I used and compared in this post:

- (Google original ver.) BERT-Base, Multilingual Cased (Link)

- (Kikuta ver.) BERT with SentencePiece for Japanese text (Link)

- (Kyoto Univ. ver.) BERT Japanese Pretrained Model (BASE w/ WWM) (Link)

- (Kyoto Univ. ver.) BERT Japanese Pretrained Model (LARGE w/ WWM) (Link)

- (Tohoku Univ. ver.) Pretrained Japanese BERT models (IPADic + BPE + WWM) (Link)

- (Laboro.AI ver.) Laboro BERT Japanese (LARGE) (Link)

- (NICT ver.) NICT BERT Japanese Pre-trained Model (w/o BPE, 100k vocab.) (Link)

- (NICT ver.) NICT BERT Japanese Pre-trained Model (w/ BPE, 32k vocab.) (Link)

- (BandaiNamco ver.) Japanese DistilBERT Pretrained Model (Link)

I used the following three tasks, which are popular ones for evaluating Japanese NLP models and have freely available datasets:

- Reading comprehension with answerability dataset (RCQA) (Link)

- Livedoor news corpus (LDCC) (Link)

- Driving domain QA datasets (DDQA) (Link)

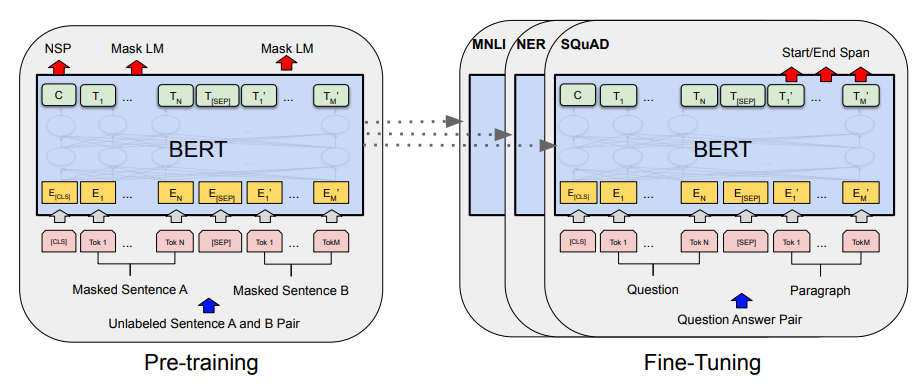

RCQA and DDQA are SQuAD-style reading comprehension tasks, where a system is given a context (a passage, often from Wikipedia) and a question, and identifies the answer to the question as a span in the context. LDCC is a document classification task. I fine-tuned the nine pretrained models above on these datasets, found the best combination of hyperparameters on their dev sets, and evaluated each model on the corresponding test set.
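To make the "answer as a span in the context" idea concrete, here is a minimal sketch of what a SQuAD-style record looks like and how the span is recovered from a character offset. The example text and the `extract_span` helper are made up for illustration; they don't come from RCQA or DDQA.

```python
# A hypothetical SQuAD-style record. The text is invented for illustration
# and is not taken from RCQA or DDQA.
example = {
    "context": "東北大学は宮城県仙台市にある国立大学です。",
    "question": "東北大学はどこにありますか?",
    "answers": [{"text": "宮城県仙台市", "answer_start": 5}],
}


def extract_span(context: str, answer_start: int, answer_text: str) -> str:
    """Return the answer span, checking that it really occurs at the offset."""
    end = answer_start + len(answer_text)
    span = context[answer_start:end]
    if span != answer_text:
        raise ValueError(f"answer not found at offset {answer_start}: {span!r}")
    return span


answer = example["answers"][0]
print(extract_span(example["context"], answer["answer_start"], answer["text"]))
```

A model fine-tuned on this kind of data predicts the start and end positions of the span rather than generating the answer text.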

Experiments

I'm not going into the details of the experiments in this post—I'll just point interested readers to NICT's experiment page where they detailed how they evaluated their pretrained model on the RCQA dataset. The steps should be easy to follow even if you don't read Japanese. You can download the code I used for this experiment from the resources page (you need to sign up for free membership).

Here are some additional details of the experiments:

- To non-Japanese speakers: note that you need to install MeCab and its Juman dictionary manually before trying to reproduce the experiments. You need to add a `--with-charset=utf8` option when you run `./configure` when installing both.

- I used PyTorch to fine-tune and evaluate all the models. If a model only provides a TensorFlow checkpoint, I converted it with transformers-cli.

- Writing fine-tuning and evaluation scripts for all these tasks from scratch would be a lot of work and bug-prone, so I used the run_squad.py script from Hugging Face Transformers for the reading comprehension tasks and run_glue.py for document classification.

- I ran a grid search over the effective batch size (16, 32), learning rate (5e-5, 2e-5), and the number of epochs (2, 3) on the dev set to determine the best combination of hyperparameters. Note that these ranges are smaller than the ones used in NICT's experiment because of time constraints. As a result, the specific evaluation metrics can fluctuate slightly, although I doubt it would affect the overall ranking of the models evaluated here.

- For RCQA, I used the same train/dev/test splits as NICT's experiment. For LDCC, I shuffled the dataset and split it into train/dev/test sets at an 8:1:1 ratio. Because including the titles gives away too much information about the documents being classified, I only included the document body (and not the titles) when preprocessing the dataset.

- I used CUDA version 10.2. If you do too, you need to specify `cudatoolkit=10.2` when you create a new conda environment. I installed apex from the latest master.
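Two of the details above, the hyperparameter grid and the 8:1:1 LDCC split, are easy to sketch in plain Python. This is only an illustration of the idea, not the code I actually ran: the function names and the fixed random seed are assumptions made for this example.

```python
import itertools
import random

# Hyperparameter ranges as listed above.
BATCH_SIZES = [16, 32]
LEARNING_RATES = [5e-5, 2e-5]
EPOCHS = [2, 3]


def grid():
    """Enumerate every (batch size, learning rate, epochs) combination."""
    return [
        {"batch_size": b, "learning_rate": lr, "epochs": e}
        for b, lr, e in itertools.product(BATCH_SIZES, LEARNING_RATES, EPOCHS)
    ]


def split_8_1_1(items, seed=0):
    """Shuffle a copy of items and split it into 8:1:1 train/dev/test.

    The seed is an assumption for reproducibility; the post doesn't state one.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 8 // 10  # integer math avoids float rounding
    n_dev = len(shuffled) // 10
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]  # whatever is left goes to test
    return train, dev, test
```

The grid has only 2 x 2 x 2 = 8 combinations per model and task, which is what keeps the search affordable across nine models.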

Results

Here are the evaluation results of the model comparison:

| Version | RCQA Exact | RCQA F1 | LDCC Acc | LDCC F1 | DDQA Exact | DDQA F1 | Model |
|---|---|---|---|---|---|---|---|
| Google original ver. | 69.93 | 69.99 | 84.51 | 84.15 | 61.62 | 61.62 | multi_cased_L-12_H-768_A-12 |
| Kikuta ver. | 73.24 | 76.30 | 91.58 | 91.23 | 74.71 | 85.29 | bert-wiki-ja |
| Kyoto Univ. ver. | 73.83 | 75.56 | 91.17 | 90.80 | 83.69 | 89.17 | Japanese_L-12_H-768_A-12_E-30_BPE_WWM_transformers |
| Kyoto Univ. ver. | 76.27 | 77.94 | 91.71 | 91.39 | 86.72 | 91.20 | Japanese_L-24_H-1024_A-16_E-30_BPE_WWM_transformers |
| Tohoku Univ. ver. | 77.37 | 78.45 | 91.17 | 90.88 | 87.99 | 92.58 | BERT-base_mecab-ipadic-bpe-32k_whole-word-mask |
| Laboro.AI ver. | 73.79 | 77.08 | 90.76 | 90.23 | 75.98 | 86.67 | laborobert |
| NICT ver. | 77.47 | 78.85 | 92.26 | 91.85 | 88.77 | 92.31 | NICT_BERT-base_JapaneseWikipedia_32K_BPE |
| NICT ver. | 75.79 | 77.31 | 91.98 | 91.75 | 87.99 | 92.42 | NICT_BERT-base_JapaneseWikipedia_100K |
| BandaiNamco ver. | 65.72 | 67.21 | 82.20 | 80.50 | 70.90 | 78.55 | DistilBERT-base-jp |
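If you want to slice these numbers yourself, a small script is enough. The sketch below copies the three F1 columns from the table and reports the best model per task, plus a ranking by unweighted mean F1. The short model labels and the choice of a plain mean are assumptions made for this example, not necessarily how the results should be weighed.

```python
# F1 scores copied from the results table: (RCQA F1, LDCC F1, DDQA F1).
# The short labels are just for this sketch.
TASKS = ("RCQA", "LDCC", "DDQA")
F1_SCORES = {
    "Google multilingual": (69.99, 84.15, 61.62),
    "Kikuta": (76.30, 91.23, 85.29),
    "Kyoto Univ. (BASE)": (75.56, 90.80, 89.17),
    "Kyoto Univ. (LARGE)": (77.94, 91.39, 91.20),
    "Tohoku Univ.": (78.45, 90.88, 92.58),
    "Laboro.AI": (77.08, 90.23, 86.67),
    "NICT (32k BPE)": (78.85, 91.85, 92.31),
    "NICT (100k)": (77.31, 91.75, 92.42),
    "BandaiNamco DistilBERT": (67.21, 80.50, 78.55),
}


def best_per_task(scores):
    """Map each task to the model with the highest F1 on that task."""
    return {
        task: max(scores, key=lambda name: scores[name][i])
        for i, task in enumerate(TASKS)
    }


def rank_by_mean_f1(scores):
    """Sort model names by unweighted mean F1 over the three tasks, best first."""
    return sorted(scores, key=lambda name: sum(scores[name]) / len(TASKS), reverse=True)


for task, name in best_per_task(F1_SCORES).items():
    print(f"best {task} F1: {name}")
print("ranking by mean F1:", rank_by_mean_f1(F1_SCORES))
```

On these numbers the NICT 32k BPE model has the highest F1 on RCQA and LDCC, while Tohoku University's model has the highest F1 on DDQA.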

Conclusion

In short, as of this writing, I recommend using the NICT ver. (w/ BPE, 32k vocab.). It achieves the best results overall across the tasks, and its usage is well documented.

Tohoku University's version is not bad either. Their models are available via Hugging Face Transformers by default, which makes them really handy when you want to try one out quickly.
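For reference, loading one of the Tohoku models looks roughly like the sketch below. The model ID is my assumption of the whole-word-masking checkpoint name on the Hugging Face Hub, and the tokenizer will likely also need MeCab bindings (the `fugashi` and `ipadic` Python packages) installed. Check the model card before relying on either.

```python
# Assumed Hub ID for the Tohoku whole-word-masking model; verify on the model card.
MODEL_ID = "cl-tohoku/bert-base-japanese-whole-word-masking"


def load_tohoku_bert(model_id: str = MODEL_ID):
    """Download the tokenizer and the encoder from the Hugging Face Hub."""
    # Imported lazily so this file can be read and imported without transformers.
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModel.from_pretrained(model_id)
    return tokenizer, model


# Usage (requires network access and the packages mentioned above):
#   tokenizer, model = load_tohoku_bert()
#   inputs = tokenizer("日本語のBERTモデルを比較します。", return_tensors="pt")
#   outputs = model(**inputs)
```

The other models in this comparison generally need to be downloaded from their own pages and loaded from a local directory instead.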

On the other hand, I can't recommend Google's original version. It breaks up all CJK characters one by one, which is not a good tokenization method for Japanese.
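Here is a simplified sketch of that character-splitting step, to show what it does to a Japanese string. It only checks the main CJK Unified Ideographs block (U+4E00 to U+9FFF), which is an assumption made to keep the example short; the real tokenizer covers more code point ranges and then applies WordPiece to whatever remains.

```python
from typing import List


def is_cjk_ideograph(ch: str) -> bool:
    """True for characters in the main CJK Unified Ideographs block."""
    return 0x4E00 <= ord(ch) <= 0x9FFF


def split_cjk(text: str) -> List[str]:
    """Put whitespace around each ideograph, then split on whitespace."""
    spaced = "".join(f" {ch} " if is_cjk_ideograph(ch) else ch for ch in text)
    return spaced.split()


print(split_cjk("日本語のBERT"))  # ['日', '本', '語', 'のBERT']
```

The word 日本語 ("Japanese language") ends up as three separate single-character tokens, so the model never sees it as one unit. In this sketch kana such as の are not ideographs and are left for the later subword step.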

Overall, pretrained models with SentencePiece tokenization (Kikuta ver. and Laboro.AI ver.) do not perform as well as the ones with morphology-based tokenization (Tohoku Univ. ver. and NICT ver.), especially for the reading comprehension tasks. For those fine-grained tasks such as reading comprehension and named entity recognition (NER), how you tokenize Japanese text matters, which might be the reason why linguistically motivated tokenization performs better.

There are many other Japanese pretrained language models that I didn't try in this post, including:

- DistilBERT by Laboro.AI

- ELECTRA by Cinnamon AI

- ALBERT by Stockmark

- XLNet by Stockmark

- BERT by Stockmark

- RoBERTa by Asahi Shimbun

- hottoSNS-BERT by Hottolink

I did compare the DistilBERT model from Laboro.AI and BERT from Stockmark, but both scored poorly, possibly because of issues such as a mismatched vocabulary. I might give them another try in the near future.

References

- 日本語でのBERT、XLNet、ALBERTとかをまとめてみた (a summary of Japanese pretrained models including BERT, XLNet, and ALBERT)

- 【実装解説】日本語版BERTでlivedoorニュース分類:Google Colaboratoryで(PyTorch) (a tutorial on classifying the LDCC dataset with Japanese BERT)